OpenRefine Data Cleaning

Introduction

Data cleaning is an important part of scientific data management and curation. It involves activities such as identifying and correcting spelling errors (for example in scientific names), mapping data to agreed controlled vocabularies (e.g. ISO country names), setting correct references to external files (e.g. digital images), and re-arranging data so that they comply with the standards required for specific data processing steps (e.g. imports into the JACQ collection management system at the BGBM).

The BGBM scientific data workflows are based on the assumption that scientists clean their data themselves to meet the BGBM data formats and standards. To support this important step of the scientific process, we provide an open installation of OpenRefine (formerly known as Google Refine) on a BGBM server at http://services.bgbm.org/ws/OpenRefine/.

!! Please note that the BGBM OpenRefine server is intended only as a tool for working on your data. It does not offer functions for storing data permanently and safely. However, we will not delete any data without notice. !!

Like Microsoft Excel, OpenRefine operates primarily on tabular data, with each row representing, for example, a measurement, occurrence record, or specimen, and each column representing a specific attribute (e.g. collectors, locality, longitude, latitude). Unlike Microsoft Excel, OpenRefine focuses on functions and operations that are particularly useful for cleaning and arranging scientific data. These include, for example, the automated detection and merging of (potential) duplicate data items such as collector names that have been captured with slightly different spellings but refer to the same person or team. The BGBM BDI team is continually adding functions to the OpenRefine server installation that are particularly useful for processing botanical collection data. Please contact us for further information (email).
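To illustrate how differently spelled collector names can be recognised as potential duplicates, here is a minimal Python sketch of a key-collision approach, in the spirit of the "fingerprint" clustering offered by OpenRefine. It is a simplified illustration, not the exact algorithm OpenRefine uses; the function names and the sample collector names are made up for this example.

```python
import re
import unicodedata
from collections import defaultdict


def fingerprint(value: str) -> str:
    """Build a normalised key: lowercase, no accents, no punctuation, sorted unique tokens."""
    text = unicodedata.normalize("NFKD", value.strip().lower())
    # Drop combining marks so that "ü" and "u" produce the same key.
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    # Replace punctuation with spaces, then sort and de-duplicate the tokens.
    text = re.sub(r"[^\w\s]", " ", text)
    return " ".join(sorted(set(text.split())))


def cluster(values):
    """Group values that share a fingerprint; return only groups with more than one spelling."""
    groups = defaultdict(list)
    for value in values:
        groups[fingerprint(value)].append(value)
    return [group for group in groups.values() if len(group) > 1]


# Invented sample data for illustration only.
collectors = ["Müller, H.", "H. Muller", "muller h", "Schmidt, A."]
clusters = cluster(collectors)
print(clusters)
```

Each resulting cluster is only a candidate for merging. As in OpenRefine, a person should review the suggestions before the values are unified, because different collectors can share the same normalised key.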

(Very) first steps

OpenRefine is extremely powerful, and we cannot compete with the dozens of excellent manuals and tutorial videos that already exist. Please refer to the Resources section for further information. Here, we would like to guide you through the very first steps with OpenRefine, using a BGBM Collection Data Form (CDF) as an example:

- Open your web browser and navigate to the BGBM OpenRefine installation at http://services.bgbm.org/ws/OpenRefine/.

- Press the “Choose File/Browse” button and select a CDF file of your choice.

- Press “Next”.

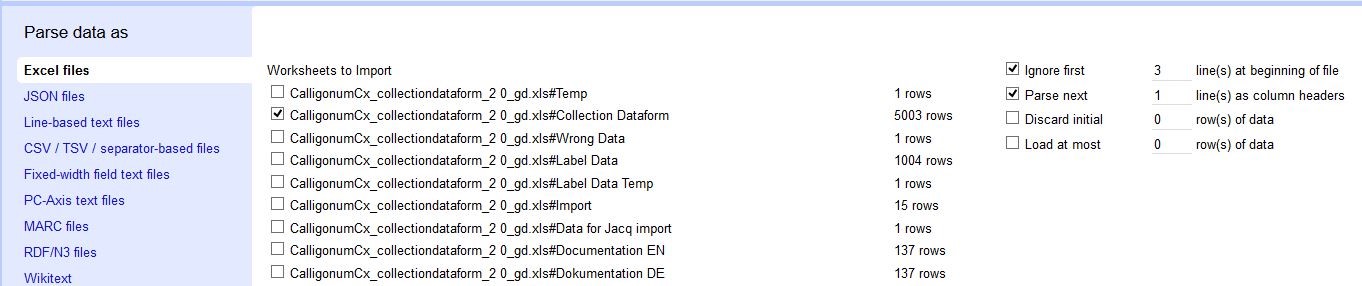

- You will now see a first preview of your data. Since your CDF file consists of several (Excel) worksheets, OpenRefine needs to know which of them to import. In this case, deselect all worksheets except the collection dataform worksheet (see Fig. 1). OpenRefine also needs to know which row contains the relevant header of your worksheet. For the CDF, you will have to set “ignore first 3 line(s)” and “parse next 1 line(s)”.

- Finally, press the “create project” button and you are ready to go.

Fig. 1: Selection of worksheets and header row.
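The effect of the two import settings ("ignore first 3 line(s)" and "parse next 1 line(s)") can be sketched in a few lines of Python. This is only an illustration of the idea, not how OpenRefine implements its importer; the function `parse_sheet` and the sample rows are hypothetical.

```python
def parse_sheet(rows, ignore_first=3, header_lines=1):
    """Skip the leading rows, read the header row(s), and return the records as dicts."""
    header_rows = rows[ignore_first:ignore_first + header_lines]
    # If several header lines are parsed, the parts of each column name are joined.
    header = [
        " ".join(str(part) for part in parts if part).strip()
        for parts in zip(*header_rows)
    ]
    data_rows = rows[ignore_first + header_lines:]
    return [dict(zip(header, row)) for row in data_rows]


# Invented worksheet content: three rows above the header, then the header, then data.
rows = [
    ["(form title)", "", ""],
    ["(instructions)", "", ""],
    ["", "", ""],
    ["Collectors", "Latitude", "Longitude"],
    ["Smith, J.", "52.45", "13.30"],
]
records = parse_sheet(rows)
print(records[0]["Collectors"])
```

With the default values, the fourth row supplies the column names and every later row becomes one record.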

E-Mesh Extension

Overview

The e-mesh extension is an OpenRefine plugin for managing and validating botanical specimen data. It provides specialized tools for data quality checking, field normalization, and enrichment through integration with the JACQ herbarium management system. The extension supports two primary data standards: CDF (Collection Data Form) and JACQ import files. Further data standards can be added as required.

This section provides a high-level overview of the extension's architecture, components, and workflows. For detailed information about specific subsystems, see:

- Client-side UI components: Client-Side UI Extensions

- Server-side integration: Server-Side Components

- Validation rules and standards: Configuration and Standards

- Complete workflow guides: Data Workflows

System Architecture

The e-mesh extension operates as a three-tier system within OpenRefine, consisting of client-side JavaScript UI enhancements, server-side Java data processing, and configuration-driven validation rules.

Core Data Standards

The extension enforces data quality through two constraint schemas that define required fields, data types, controlled vocabularies, and other validation rules.

CDF Standard Fields (Subset)

The CDF standard defines specimen documentation requirements:

| Example Categories | Example Fields | Example Validation |
|---|---|---|
| Collection Data | Collectors, Collection Date from, Collection Date to | Required, ISO-8601 dates |
| Geographic | Latitude, Longitude, Geocode Method | Required, decimal degrees, range validation |
| Taxonomic | Family, Genus, Specific Epithet | Required fields |

Sources

src/main/resources/rules/constraints-CDF.json (lines 1–575)
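To make the kinds of checks in the table more concrete, here is a minimal Python sketch of rule-driven validation for a single record. The structure of `constraints-CDF.json` is not shown in this document, so the `RULES` dictionary below is a hypothetical stand-in and not the extension's actual schema. The coordinate bounds are the usual ranges for decimal degrees and are assumed here, as is the restriction of dates to the `YYYY-MM-DD` form.

```python
from datetime import date

# Hypothetical rule set for illustration; not the format of constraints-CDF.json.
RULES = {
    "Collectors": {"required": True},
    "Collection Date from": {"required": True, "type": "iso_date"},
    "Latitude": {"required": True, "type": "decimal", "min": -90.0, "max": 90.0},
    "Longitude": {"required": True, "type": "decimal", "min": -180.0, "max": 180.0},
}


def validate(record):
    """Return a list of human-readable problems found in one record."""
    errors = []
    for field, rule in RULES.items():
        value = str(record.get(field, "") or "").strip()
        if not value:
            if rule.get("required"):
                errors.append(f"{field}: required value is missing")
            continue
        if rule.get("type") == "iso_date":
            try:
                date.fromisoformat(value)
            except ValueError:
                errors.append(f"{field}: not an ISO-8601 date (YYYY-MM-DD)")
        elif rule.get("type") == "decimal":
            try:
                number = float(value)
            except ValueError:
                errors.append(f"{field}: not a decimal number")
                continue
            if not rule["min"] <= number <= rule["max"]:
                errors.append(f"{field}: outside {rule['min']}..{rule['max']}")
    return errors


# Invented sample records.
good = {"Collectors": "Smith, J.", "Collection Date from": "2021-05-14",
        "Latitude": "52.45", "Longitude": "13.30"}
bad = {"Collectors": "", "Collection Date from": "14.05.2021",
       "Latitude": "95", "Longitude": "abc"}
print(validate(good))
print(validate(bad))
```

Keeping the rules in a data structure rather than in code mirrors the configuration-driven approach described above: a further data standard would need a new rule set, not a new validator.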

JACQ Standard Fields (Subset)

The JACQ standard focuses on herbarium specimen documentation to be imported into the JACQ herbarium management system:

| Example Categories | Example Fields | Example Validation |
|---|---|---|
| Specimen Identity | HerbNummer, collectionID, CollectionNumber | Required |
| Collection | Sammler, Datum, Datum2 | Required collector and date |
| DMS Coordinates | coord_NS, lat_degree, lat_minute, lat_second | Dependent required, 0–90° latitude |

Sources

src/main/resources/rules/constraints-JACQ.json (lines 1–137)
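The "dependent required" and range checks for the DMS latitude fields could look roughly like the following Python sketch. Two things are assumptions here and are not taken from `constraints-JACQ.json`: that "dependent required" means all four fields must be filled as soon as one of them is, and that `coord_NS` holds `N` or `S`. The function name `check_latitude` is hypothetical.

```python
DMS_FIELDS = ("coord_NS", "lat_degree", "lat_minute", "lat_second")


def check_latitude(record):
    """Return (decimal_latitude, errors) for the DMS latitude fields of one record."""
    values = {f: str(record.get(f, "") or "").strip() for f in DMS_FIELDS}
    if not any(values.values()):
        return None, []  # no coordinate given at all, so there is nothing to check
    # Assumed meaning of "dependent required": one filled field requires the others.
    missing = [f for f, v in values.items() if not v]
    if missing:
        return None, [f"{f}: required because other DMS fields are filled" for f in missing]
    try:
        degree, minute, second = (float(values[f]) for f in DMS_FIELDS[1:])
    except ValueError:
        return None, ["latitude: degree, minute and second must be numeric"]
    hemisphere = values["coord_NS"].upper()
    if hemisphere not in ("N", "S"):  # assumed vocabulary for coord_NS
        return None, ["coord_NS: expected N or S"]
    if not (0 <= minute < 60 and 0 <= second < 60):
        return None, ["latitude: minutes and seconds must be in the range 0 to 59"]
    decimal = degree + minute / 60 + second / 3600
    if not 0 <= decimal <= 90:
        return None, ["latitude: must lie between 0 and 90 degrees"]
    return (decimal if hemisphere == "N" else -decimal), []


# Invented sample record: 52 degrees 27 minutes north.
latitude, problems = check_latitude(
    {"coord_NS": "N", "lat_degree": "52", "lat_minute": "27", "lat_second": "0"}
)
```

Returning the decimal value together with the error list lets the same check serve both validation and conversion to decimal degrees.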

Major Subsystems

Column Menu Extensions

This subsystem provides custom operations accessible from column headers, organized into four functional groups.

Sources

module/scripts/project/data-table-column-header/column-header-extend-menu.js (lines 1–1532)

Resources

The OpenRefine website (http://openrefine.org/) provides a very nice compilation of tutorial videos, which will help you to understand the basic concepts of data exploration, cleaning, and transformation. There is also a written manual in the form of a wiki, with common use cases and links to external tutorials in different languages (https://github.com/OpenRefine/OpenRefine/wiki).